Tenant Satisfaction Perception Survey Design Approach for 2024/25

Approach Rationale

We take a ‘look twice’ approach to our customer satisfaction survey results: looking once to improve things for the individual customer affected; and a second time to identify wider patterns that can improve things for our business and wider customer base. As such, we took a sample-based approach rather than a census approach, and a phased approach of surveying continuously across the year, rather than as a one-off annual activity. We took this approach as we believe this steady and manageable stream of feedback to be the most effective way to listen and respond comprehensively to the feedback received, providing the most benefit to both the customers responding and our business. Additionally, although satisfaction statistics are important, we believe the greatest value from these surveys is in uncovering the reasons behind the figures: understanding, and learning from, the customer’s experience. For this reason, we chose to take a primarily telephone-based approach to our surveys, allowing us to build a rapport with the respondents and delve deeper into their perceptions of us to gain additional and actionable insight for improvement. We also know this to be the most inclusive method of surveying our tenants, as we currently have missing email and mobile phone data for some of our lead tenants.

The TSM surveying activity is undertaken by our dedicated Insight Assistant, and no external contractors are used. No incentives are offered to take part.

Methodology

Sampling or Census

Due to the size of our housing stock, we took a sampling approach in line with the TSM guidance requirements. As our current stock of shared ownership properties is below 1,000, we are only required to survey those in our rented accommodation. No household may be represented more than once per year.

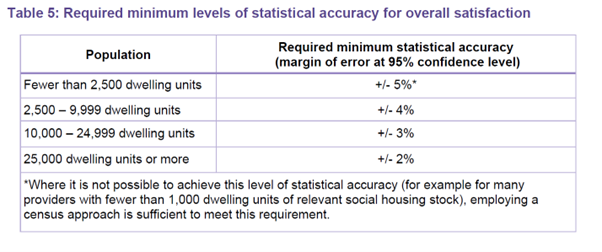

In line with the required minimum levels for statistical accuracy (as shown in the table below), we ensure that our sample size is calculated each year to provide results with a margin of error of +/- 4% at a 95% confidence level. To do this, we take a random sample of our households as our sampling frame to invite to survey each month, to achieve responses from at least 47 customers per month (reviewed annually to reflect changes in stock numbers). Our total number of customers surveyed this year exceeded our target of 564, with a total of 706 responses achieved. We utilised the ‘random selection’ filter within the Call Manager function to allow us to randomly select the tenants to invite.
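The arithmetic behind these targets can be sketched as follows. This is a minimal illustration, assuming the standard sample size formula for a proportion with a finite population correction. The TSM guidance sets its minimums by population band, so this is not necessarily how the published figures were derived. The function names and the stock figure of 10,000 are hypothetical placeholders, not Aspire’s actual method or stock number.

```python
import math

# Hypothetical placeholder, NOT Aspire's actual stock figure.
ILLUSTRATIVE_STOCK = 10_000


def required_sample_size(population, margin=0.04, z=1.96, p=0.5):
    """Minimum responses for a given margin of error at ~95% confidence.

    Uses the most cautious assumption (p = 0.5) and applies the
    finite population correction.
    """
    n0 = (z ** 2) * p * (1 - p) / margin ** 2  # size for a very large population
    n = n0 / (1 + (n0 - 1) / population)       # finite population correction
    return math.ceil(n)


def monthly_target(annual_target, months=12):
    """Spread an annual response target evenly across the year."""
    return math.ceil(annual_target / months)


def achieved_margin(responses, population, z=1.96, p=0.5):
    """Margin of error actually achieved for a given number of responses."""
    fpc = math.sqrt((population - responses) / (population - 1))
    return z * math.sqrt(p * (1 - p) / responses) * fpc


if __name__ == "__main__":
    print(required_sample_size(ILLUSTRATIVE_STOCK))
    print(monthly_target(564))  # 564 / 12 -> 47 per month
    print(round(achieved_margin(706, ILLUSTRATIVE_STOCK), 4))
```

Under these assumptions, collecting more responses than the minimum (706 against a target of 564) narrows the margin of error further, which is why exceeding the target strengthens rather than distorts the results.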

2. This is reviewed annually. Aspire’s current shared ownership stock is circa 500.

We took a stratified random sampling approach, dividing households into subgroups based on their tenancy start date. Each month, our random sample was selected only from those with tenancy start dates in a specified calendar month. This ensured that all households had an equal chance of being selected across the course of the year and prevented the same household from being surveyed more than once per year. In any particular month, we sampled only those households with a tenancy start month 5 months earlier. For example, in September we surveyed households with a tenancy start month of April. This was to prevent over-surveying or under-surveying of our newest tenants (i.e. those with us less than 12 months). Matching the surveying calendar month exactly to the tenancy start calendar month would have meant surveying these tenants either immediately at move-in (clashing with our new tenant survey) or at their one-year anniversary (excluding the voices of any tenants with us for less than a year). Having a 5-month lag meant that these customers had a gap between these survey invites, and also meant that tenants with us less than a year still had the opportunity to be surveyed. No households were excluded from the sampling frame due to exceptional circumstances.
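The 5-month lag rule can be sketched in a few lines. This is an illustration only: in practice the selection was made with the ‘random selection’ filter in Call Manager, and the function names and the `tenancy_start_month` field used here are hypothetical.

```python
import random

LAG_MONTHS = 5  # lag between tenancy start month and survey month


def tenancy_start_month_for(survey_month, lag=LAG_MONTHS):
    """Return the tenancy start month (1-12) sampled in a given survey month (1-12)."""
    return (survey_month - lag - 1) % 12 + 1


def draw_monthly_sample(households, survey_month, sample_size, seed=None):
    """Randomly select households from the stratum due for survey this month.

    `households` is a list of dicts with a 'tenancy_start_month' key
    (a hypothetical data layout used for illustration).
    """
    target = tenancy_start_month_for(survey_month)
    stratum = [h for h in households if h["tenancy_start_month"] == target]
    rng = random.Random(seed)
    return rng.sample(stratum, min(sample_size, len(stratum)))


if __name__ == "__main__":
    print(tenancy_start_month_for(9))  # September -> 4 (April)
    print(tenancy_start_month_for(1))  # January -> 8 (August)
```

Because each survey month maps to exactly one tenancy start month, and the twelve mappings cover every calendar month once, each household falls into the sampling frame in one month of the year only.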

Data Collection

We utilise software called ‘CX Feedback’ for customer surveys, which allows us to track responses and complete surveys by a combination of telephone, text and email. Due to our primary preference towards telephone (discussed in section 1 ‘Rationale’, above) we took the following approach to collecting the survey responses:

- Invite attempt 1 – phone

- Invite attempt 2 – phone

- Invite attempt 3 – text/email (dependent on available contact data and recorded preferences)

We believe that multiple attempts, combined with multiple completion methods, provide a fair and balanced opportunity to participate. This approach was successful last year, so we have kept it unchanged.

When the telephone calls were made, the customer was also given the option of having the survey sent to them for self-completion via text or email if they preferred. The customer was also offered a call back for telephone completion at a time that suited them better.

All surveys were collected through the survey built in the CX Feedback software, and the data is stored there securely.

Survey Design

The survey questions included are the 12 questions required by the TSM regulation. In addition to these, we included open text comment boxes after some questions to record additional insight and reasons behind the answers given. We also included a further measure capturing satisfaction with the value for money of rent, which we previously collected through STAR and which the Finance team uses for various reports.

At the end of the survey we include a question to establish whether respondents consent to being re-contacted regarding any issues they raised.

A survey introduction page was designed, with wording matching the requirements of page 8 of TSM guidance Annex 5. This also includes a link to our privacy statement, and a statement that the responses are confidential and will not affect the customer’s tenancy. Within the thank you message, the customer is also provided with details of how to make a complaint if they feel dissatisfied with our services. This information was given verbally to those completing the survey by telephone.

Equalities and Inclusion

It is important that we make participation in the survey as accessible as possible. The list below details the potential barriers to participation that we identified, and the actions we took to reduce them:

- Language – Telephone researcher to speak in plain English and clearly. All written instructions to be written in plain English. ‘Read aloud’ functionality included in the survey design for those self-completing.

- Visual impairment – Telephone survey completion reduces this barrier. Font size of 14 or above used throughout the survey. ‘Read aloud’ functionality included in the survey design for those self-completing.

- Literacy – Telephone survey completion reduces this barrier. All written instructions to be written in plain English. ‘Read aloud’ functionality included in the survey design for those self-completing.

- Lack of access to digital – Telephone survey completion reduces this barrier. Options to send by text or email too.

Customer Representation

It is a requirement of the TSM regulation to ensure that the survey results are representative of our customer population. This includes the following characteristics as identified by the TSM guidance: stock type; age; ethnicity; building type; household or property size; and geographical area.

To do this, we took the following approach:

- A review of our internal customer data, identifying data quality gaps and driving forward an action plan to improve them

- Utilising the ‘representation monitoring’ dashboard provided within CX Feedback, which compared the characteristic breakdown of the respondents against that of the target population. Customer characteristics monitored included, but were not limited to: stock type; age; ethnicity; building type; household or property size; geographical area.

- Utilising this insight to target under-represented groups where needed. These response representation dashboards were reviewed fortnightly to ensure that our sample continued to be representative of our wider tenant population. Where groups started to become under-represented, this was spotted early and sampling was further targeted at those groups to increase numbers and redress the balance. Being proactive and monitoring these on a fortnightly basis meant that we successfully achieved a representative sample and did not require the use of weighting in our results.
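The kind of comparison made during the fortnightly review can be sketched as below. This is an illustration only and not how the CX Feedback dashboard is implemented: the function name, the 5 percentage point tolerance and the example data are all hypothetical.

```python
from collections import Counter


def under_represented(population, respondents, tolerance=0.05):
    """Flag groups whose share of respondents trails their share of the population.

    `population` and `respondents` are lists of group labels for one
    characteristic (for example building type). Returns a dict mapping each
    flagged group to its shortfall (respondent share minus population share).
    The tolerance is a hypothetical threshold chosen for illustration.
    """
    pop_counts = Counter(population)
    resp_counts = Counter(respondents)
    pop_total = sum(pop_counts.values())
    resp_total = sum(resp_counts.values())

    flagged = {}
    for group, count in pop_counts.items():
        pop_share = count / pop_total
        resp_share = resp_counts.get(group, 0) / resp_total if resp_total else 0.0
        gap = resp_share - pop_share
        if gap < -tolerance:
            flagged[group] = gap
    return flagged


if __name__ == "__main__":
    # Made-up example data, not Aspire's actual figures.
    population = ["house"] * 60 + ["flat"] * 40
    respondents = ["house"] * 80 + ["flat"] * 20
    print(under_represented(population, respondents))
```

Any group flagged in a check like this would be a candidate for additional targeted invitations in the following weeks, which is the approach that removed the need for weighting.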

Data Protection

An initial data protection assessment was carried out in Jan 2021 for our CX Feedback surveying software and activity. This was reviewed in Jan 2025 to also include ‘Engagement Plus’, our additional module for the software, and was signed off by the Director of Corporate Services and the DPO. This will be reviewed every 3 years.

Customer response data is kept confidential, and customers are asked within the survey whether they consent to being re-contacted regarding any issues raised.

When the survey is conducted by telephone, customers are asked at the beginning if they consent to take part. Customers who receive a self-completion questionnaire can choose whether or not to complete the survey by clicking on the attached link. A link to Aspire’s data privacy statement is included at the top of every survey.

Access to CX Feedback is secure and requires individual log in, with accounts set up and managed by the Business Intelligence team. Permission settings are utilised within the CX Feedback software to control the level of information users have access to. This is controlled by the software administrators within the Business Intelligence team.

Dissemination and Sharing of Results

Internal Reporting

Results and performance are shared internally on a monthly basis through the Performance reports. These are published on our internal Insight Hub for the whole organisation to access, and also shared upwards to leadership and Board through the usual reporting channels. The corporate KPIs were updated for the 23/24 financial year to include the TSMs and continue to be reviewed annually, to improve transparency and accountability within leadership and the wider business.

External Reporting

As required by the regulator, results are submitted to it annually by the Business Intelligence team alongside the other measures collected.

Results are published annually to our customers and stakeholders via the Aspire website and any other methods the Communications team deems appropriate. These publications follow the Aspire comms style guide for accessibility and are written in plain English.